The Problem with Real World Benchmarking

Welcome to the real world. Not Middle Earth, Arrakis or Westeros, but the authentically mundane real world. This is where ordinary people sit in front of ordinary computers running everyday software, and in the 2012 real world these ordinary people will be happily building 35,000-row spreadsheets while using Internet Explorer 8 or Firefox 3.6.8 to browse the Web. Wait, what?

To be more specific, welcome to Planet Benchmark's definition of the real world, which basically means the benchmark uses real software to gauge a computer’s performance, rather than a bunch of synthetic tests that churn out an arbitrary number. We have our own real-world benchmark suite (and yes, we know it’s old, and there are indeed plans afoot to update it), which uses readily available open source apps to try to replicate the tasks for which bit-tech and Custom PC readers might use their PCs.

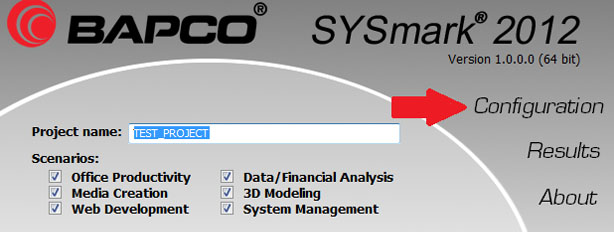

SysMark 2012 - the industry standard benchmark

We’re something of a maverick in this respect, though. Most review sites and tech magazines use a ready-made benchmark suite that you can license. These include synthetic tests such as PCMark, but also suites that use real software, such as WorldBench and, more importantly, SysMark.

SysMark is effectively the industry-standard benchmark. It’s used not only by reviewers, but also by PC manufacturers, hardware and software developers and corporate IT departments. What's more, AMD’s senior vice president and chief marketing officer Nigel Dessau says that it’s regularly used as a gauge of performance when computer companies compete for government tenders. SysMark is a product of the Business Applications Performance Corporation (BAPCo), a non-profit consortium of hardware and software makers, as well as publishers, whose members work together and vote on which software and features will be tested in the next version of SysMark, as well as in BAPCo's other benchmarks.

As you can imagine, its members don't always agree, and three prominent members have just resigned from the consortium: AMD, Nvidia and VIA. There are various complaints about the benchmark, but the overriding one is that the workloads recreated by the latest version, SysMark 2012, are not representative of how most people use their PCs.

As AMD’s Nigel Dessau asked on his blog last year: ‘When was the last time you completed a 35,000 row spreadsheet?’

Nvidia, AMD and VIA have all resigned from BAPCo in protest over the tests used in SysMark 2012

VIA's marketing vice president Richard Brown told us that he had similar feelings about the benchmark. 'We simply believe that the benchmark doesn’t measure the realistic PC usage scenarios that most people encounter,' says Brown. 'If you're running highly sophisticated financial modelling applications, then SysMark 2012 is probably a useful tool for evaluating a new system you're planning to purchase. If, on the other hand, you're a knowledge worker who mainly spends their time processing email, creating spreadsheets, presentations and other similar documents, as well as web browsing, social networking and viewing YouTube videos, then your criteria are different.'

That's not to say that SysMark 2012 doesn't gauge the performance of web browsers. Indeed, the list of software it uses contains both Internet Explorer and Firefox (more on this later). The apparent problem, however, is the priority that some tests are given over others in the final score. 'While SysMark 2012 is marketed as rating performance using 18 applications and 390 measurements,' an AMD spokesperson told us, 'the reality is that only seven applications and less than 10 percent of the total measurements dominate the overall score. So a small class of operations across the entire benchmark influences the overall score.'
